| Method | Backbone | Test size | VOC2007 | VOC2010 | VOC2012 | ILSVRC 2013 | MSCOCO 2015 | Speed |
|---|---|---|---|---|---|---|---|---|
| OverFeat | | | | | | 24.3% | | |
| R-CNN | AlexNet | | 58.5% | 53.7% | 53.3% | 31.4% | | |
| R-CNN | VGG16 | | 66.0% | | | | | |
| SPP_net | ZF-5 | | 54.2% | | | 31.84% | | |
| DeepID-Net | | | 64.1% | | | 50.3% | | |
| NoC | | | 73.3% | | 68.8% | | | |
| Fast-RCNN | VGG16 | | 70.0% | 68.8% | 68.4% | | 19.7%(@[0.5-0.95]), 35.9%(@0.5) | |
| MR-CNN | | | 78.2% | | 73.9% | | | |
| Faster-RCNN | VGG16 | | 78.8% | | 75.9% | | 21.9%(@[0.5-0.95]), 42.7%(@0.5) | 198ms |
| Faster-RCNN | ResNet101 | | 85.6% | | 83.8% | | 37.4%(@[0.5-0.95]), 59.0%(@0.5) | |
| YOLO | | | 63.4% | | 57.9% | | | 45 fps |
| YOLO VGG-16 | | | 66.4% | | | | | 21 fps |
| YOLOv2 | | 448x448 | 78.6% | | 73.4% | | 21.6%(@[0.5-0.95]), 44.0%(@0.5) | 40 fps |
| SSD | VGG16 | 300x300 | 77.2% | | 75.8% | | 25.1%(@[0.5-0.95]), 43.1%(@0.5) | 46 fps |
| SSD | VGG16 | 512x512 | 79.8% | | 78.5% | | 28.8%(@[0.5-0.95]), 48.5%(@0.5) | 19 fps |
| SSD | ResNet101 | 300x300 | | | | | 28.0%(@[0.5-0.95]) | 16 fps |
| SSD | ResNet101 | 512x512 | | | | | 31.2%(@[0.5-0.95]) | 8 fps |
| DSSD | ResNet101 | 300x300 | | | | | 28.0%(@[0.5-0.95]) | 8 fps |
| DSSD | ResNet101 | 500x500 | | | | | 33.2%(@[0.5-0.95]) | 6 fps |
| ION | | | 79.2% | | 76.4% | | | |
| CRAFT | | | 75.7% | | 71.3% | 48.5% | | |
| OHEM | | | 78.9% | | 76.3% | | 25.5%(@[0.5-0.95]), 45.9%(@0.5) | |
| R-FCN | ResNet50 | | 77.4% | | | | | 0.12sec(K40), 0.09sec(TitanX) |
| R-FCN | ResNet101 | | 79.5% | | | | | 0.17sec(K40), 0.12sec(TitanX) |
| R-FCN(ms train) | ResNet101 | | 83.6% | | 82.0% | | 31.5%(@[0.5-0.95]), 53.2%(@0.5) | |
| PVANet 9.0 | | | 84.9% | | 84.2% | | | 750ms(CPU), 46ms(TitanX) |
| RetinaNet | ResNet101-FPN | | | | | | | |
| Light-Head R-CNN | Xception* | 800/1200 | | | | | 31.5%@[0.5:0.95] | 95 fps |
| Light-Head R-CNN | Xception* | 700/1100 | | | | | 30.7%@[0.5:0.95] | 102 fps |
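
The MSCOCO figures in the table are given at `@0.5` and `@[0.5-0.95]`. Assuming the usual COCO convention, these refer to the intersection-over-union (IoU) threshold at which a predicted box counts as a match, with the second form averaging over thresholds from 0.50 to 0.95 in steps of 0.05. The sketch below illustrates only that matching criterion, not a full mAP computation; the function names and the `(x1, y1, x2, y2)` box layout are illustrative choices, not taken from any of the listed papers.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned (x1, y1, x2, y2) boxes."""
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Clamp at zero so non-overlapping boxes give an empty intersection.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Assumed COCO-style threshold grid: 0.50, 0.55, ..., 0.95.
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def matched_thresholds(pred_box, gt_box):
    """Thresholds at which this prediction would count as a match."""
    score = iou(pred_box, gt_box)
    return [t for t in COCO_IOU_THRESHOLDS if score >= t]
```

A prediction with IoU 0.8 against its ground-truth box counts as correct at `@0.5` but fails the three strictest thresholds, which is one reason the `@[0.5-0.95]` numbers are consistently lower than the `@0.5` ones.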

Object Detection

Natural Language Processing

Tutorials

Practical Neural Networks for NLP

- intro: EMNLP 2016

- github: https://github.com/clab/dynet_tutorial_examples

Structured Neural Networks for NLP: From Idea to Code

- slides: https://github.com/neubig/yrsnlp-2016/blob/master/neubig16yrsnlp.pdf

- github: https://github.com/neubig/yrsnlp-2016

Understanding Deep Learning Models in NLP

http://nlp.yvespeirsman.be/blog/understanding-deeplearning-models-nlp/

Deep learning for natural language processing, Part 1

https://softwaremill.com/deep-learning-for-nlp/

Neural Models

Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models

- intro: NIPS 2014 deep learning workshop

- arxiv: http://arxiv.org/abs/1411.2539

- github: https://github.com/ryankiros/visual-semantic-embedding

- results: http://www.cs.toronto.edu/~rkiros/lstm_scnlm.html

- demo: http://deeplearning.cs.toronto.edu/i2t

Improved Semantic Representations From Tree-Structured Long Short-Term Memory Networks

- arxiv: http://arxiv.org/abs/1503.00075

- github: https://github.com/stanfordnlp/treelstm

- github(Theano): https://github.com/ofirnachum/tree_rnn

Visualizing and Understanding Neural Models in NLP

- arxiv: http://arxiv.org/abs/1506.01066

- github: https://github.com/jiweil/Visualizing-and-Understanding-Neural-Models-in-NLP

Character-Aware Neural Language Models

Skip-Thought Vectors

A Primer on Neural Network Models for Natural Language Processing

Neural Variational Inference for Text Processing

- arxiv: http://arxiv.org/abs/1511.06038

- notes: http://dustintran.com/blog/neural-variational-inference-for-text-processing/

- github: https://github.com/carpedm20/variational-text-tensorflow

- github: https://github.com/cheng6076/NVDM

Sequence to Sequence Learning

Generating Text with Deep Reinforcement Learning

- intro: NIPS 2015

- arxiv: http://arxiv.org/abs/1510.09202

MUSIO: A Deep Learning based Chatbot Getting Smarter

- homepage: http://ec2-204-236-149-143.us-west-1.compute.amazonaws.com:9000/

- github(Torch7): https://github.com/deepcoord/seq2seq

Translation

Learning phrase representations using rnn encoder-decoder for statistical machine translation

- intro: GRU. EMNLP 2014

- arxiv: http://arxiv.org/abs/1406.1078

Neural Machine Translation by Jointly Learning to Align and Translate

- intro: ICLR 2015

- arxiv: http://arxiv.org/abs/1409.0473

- github: https://github.com/lisa-groundhog/GroundHog

Multi-Source Neural Translation

- intro: “report up to +4.8 Bleu increases on top of a very strong attention-based neural translation model.”

- arxiv: Multi-Source Neural Translation

- github(Zoph_RNN): https://github.com/isi-nlp/Zoph_RNN

- video: http://research.microsoft.com/apps/video/default.aspx?id=260336

Multi-Way, Multilingual Neural Machine Translation with a Shared Attention Mechanism

- arxiv: http://arxiv.org/abs/1601.01073

- github: https://github.com/nyu-dl/dl4mt-multi

- notes: https://github.com/dennybritz/deeplearning-papernotes/blob/master/notes/multi-way-nmt-shared-attention.md

Modeling Coverage for Neural Machine Translation

A Character-level Decoder without Explicit Segmentation for Neural Machine Translation

NEMATUS: Attention-based encoder-decoder model for neural machine translation

Variational Neural Machine Translation

- intro: EMNLP 2016

- arxiv: https://arxiv.org/abs/1605.07869

- github: https://github.com/DeepLearnXMU/VNMT

Neural Network Translation Models for Grammatical Error Correction

Linguistic Input Features Improve Neural Machine Translation

Sequence-Level Knowledge Distillation

- intro: EMNLP 2016

- arxiv: http://arxiv.org/abs/1606.07947

- github: https://github.com/harvardnlp/nmt-android

Neural Machine Translation: Breaking the Performance Plateau

Tips on Building Neural Machine Translation Systems

Semi-Supervised Learning for Neural Machine Translation

- intro: ACL 2016. Tsinghua University & Baidu Inc

- arxiv: http://arxiv.org/abs/1606.04596

EUREKA-MangoNMT: A C++ toolkit for neural machine translation for CPU

Deep Character-Level Neural Machine Translation

Neural Machine Translation Implementations

Google’s Neural Machine Translation System: Bridging the Gap between Human and Machine Translation

Learning to Translate in Real-time with Neural Machine Translation

Is Neural Machine Translation Ready for Deployment? A Case Study on 30 Translation Directions

Fully Character-Level Neural Machine Translation without Explicit Segmentation

Navigational Instruction Generation as Inverse Reinforcement Learning with Neural Machine Translation

Neural Machine Translation in Linear Time

- intro: ByteNet

- arxiv: https://arxiv.org/abs/1610.10099

- github: https://github.com/paarthneekhara/byteNet-tensorflow

- github(Tensorflow): https://github.com/buriburisuri/ByteNet

Neural Machine Translation with Reconstruction

A Convolutional Encoder Model for Neural Machine Translation

- intro: ACL 2017. Facebook AI Research

- arxiv: https://arxiv.org/abs/1611.02344

- github: https://github.com/pravarmahajan/cnn-encoder-nmt

Toward Multilingual Neural Machine Translation with Universal Encoder and Decoder

MXNMT: MXNet based Neural Machine Translation

Doubly-Attentive Decoder for Multi-modal Neural Machine Translation

- intro: Dublin City University & Trinity College Dublin

- arxiv: https://arxiv.org/abs/1702.01287

Massive Exploration of Neural Machine Translation Architectures

- intro: Google Brain

- arxiv: https://arxiv.org/abs/1703.03906

- github: https://github.com/google/seq2seq/

Depthwise Separable Convolutions for Neural Machine Translation

- intro: Google Brain & University of Toronto

- arxiv: https://arxiv.org/abs/1706.03059

Deep Architectures for Neural Machine Translation

- intro: WMT 2017 research track. University of Edinburgh & Charles University

- arxiv: https://arxiv.org/abs/1707.07631

- github: https://github.com/Avmb/deep-nmt-architectures

Marian: Fast Neural Machine Translation in C++

- intro: Microsoft & Adam Mickiewicz University in Poznan & University of Edinburgh

- homepage: https://marian-nmt.github.io/

- arxiv: https://arxiv.org/abs/1804.00344

- github: https://github.com/marian-nmt/marian

Sockeye

- intro: Sequence-to-sequence framework with a focus on Neural Machine Translation based on Apache MXNet

- github: https://github.com/awslabs/sockeye/

Summarization

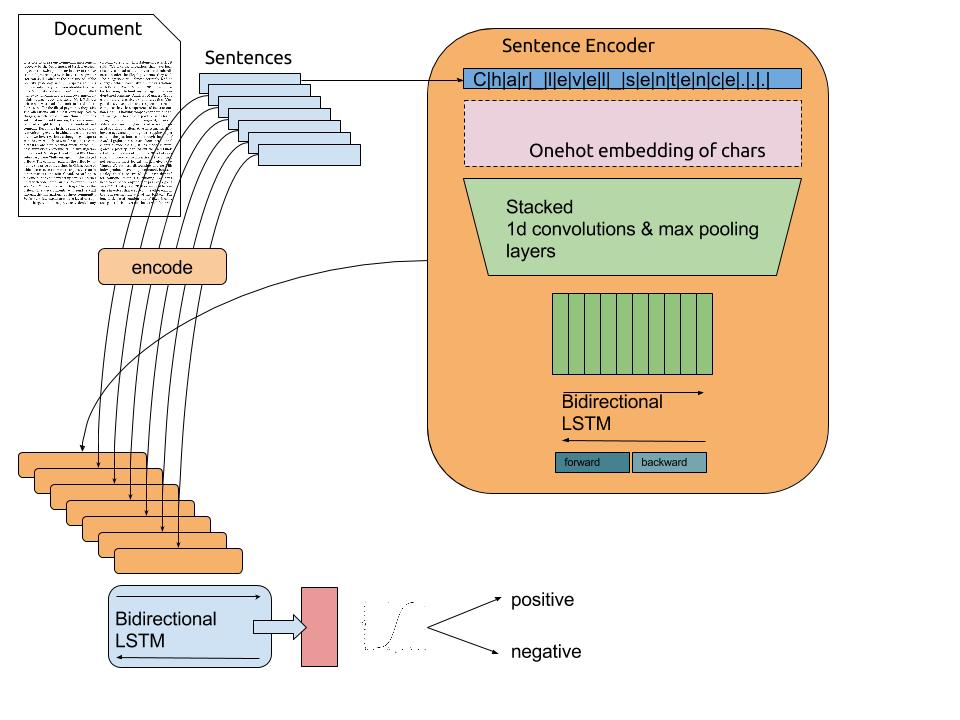

Extraction of Salient Sentences from Labelled Documents

- arxiv: http://arxiv.org/abs/1412.6815

- github: https://github.com/mdenil/txtnets

- notes: https://github.com/jxieeducation/DIY-Data-Science/blob/master/papernotes/2014/06/model-visualizing-summarising-conv-net.md

A Neural Attention Model for Abstractive Sentence Summarization

- intro: EMNLP 2015. Facebook AI Research

- arxiv: http://arxiv.org/abs/1509.00685

- github: https://github.com/facebook/NAMAS

- github(TensorFlow): https://github.com/carpedm20/neural-summary-tensorflow

A Convolutional Attention Network for Extreme Summarization of Source Code

- homepage: http://groups.inf.ed.ac.uk/cup/codeattention/

- arxiv: http://arxiv.org/abs/1602.03001

- notes: https://github.com/jxieeducation/DIY-Data-Science/blob/master/papernotes/2016/02/conv-attention-network-source-code-summarization.md

Abstractive Text Summarization Using Sequence-to-Sequence RNNs and Beyond

- intro: IBM Watson & Université de Montréal

- arxiv: http://arxiv.org/abs/1602.06023

textsum: Text summarization with TensorFlow

- blog: https://research.googleblog.com/2016/08/text-summarization-with-tensorflow.html

- github: https://github.com/tensorflow/models/tree/master/textsum

How to Run Text Summarization with TensorFlow

- blog: https://medium.com/@surmenok/how-to-run-text-summarization-with-tensorflow-d4472587602d#.mll1rqgjg

- github: https://github.com/surmenok/TextSum

Reading Comprehension

Text Comprehension with the Attention Sum Reader Network

Text Understanding with the Attention Sum Reader Network

- intro: ACL 2016

- arxiv: https://arxiv.org/abs/1603.01547

- github: https://github.com/rkadlec/asreader

A Thorough Examination of the CNN/Daily Mail Reading Comprehension Task

Consensus Attention-based Neural Networks for Chinese Reading Comprehension

- arxiv: http://arxiv.org/abs/1607.02250

- dataset(“HFL-RC”): http://hfl.iflytek.com/chinese-rc/

Separating Answers from Queries for Neural Reading Comprehension

Attention-over-Attention Neural Networks for Reading Comprehension

Teaching Machines to Read and Comprehend CNN News and Children Books using Torch

Reasoning with Memory Augmented Neural Networks for Language Comprehension

Bidirectional Attention Flow for Machine Comprehension

- project page: https://allenai.github.io/bi-att-flow/

- github: https://github.com/allenai/bi-att-flow

NewsQA: A Machine Comprehension Dataset

- arxiv: https://arxiv.org/abs/1611.09830

- dataset: http://datasets.maluuba.com/NewsQA

- github: https://github.com/Maluuba/newsqa

Gated-Attention Readers for Text Comprehension

- intro: CMU

- arxiv: https://arxiv.org/abs/1606.01549

- github: https://github.com/bdhingra/ga-reader

Get To The Point: Summarization with Pointer-Generator Networks

- intro: ACL 2017. Stanford University & Google Brain

- arxiv: https://arxiv.org/abs/1704.04368

- github: https://github.com/abisee/pointer-generator

Language Understanding

Recurrent Neural Networks with External Memory for Language Understanding

- arxiv: http://arxiv.org/abs/1506.00195

- github: https://github.com/npow/RNN-EM

Neural Semantic Encoders

- intro: EACL 2017

- arxiv: https://arxiv.org/abs/1607.04315

- github(Keras): https://github.com/pdasigi/neural-semantic-encoders

Neural Tree Indexers for Text Understanding

- arxiv: https://arxiv.org/abs/1607.04492

- bitbucket: https://bitbucket.org/tsendeemts/nti/src

Better Text Understanding Through Image-To-Text Transfer

- intro: Google Brain & Technische Universität München

- arxiv: https://arxiv.org/abs/1705.08386

Text Classification

Convolutional Neural Networks for Sentence Classification

- intro: EMNLP 2014

- arxiv: http://arxiv.org/abs/1408.5882

- github(Theano): https://github.com/yoonkim/CNN_sentence

- github(Torch): https://github.com/harvardnlp/sent-conv-torch

- github(Keras): https://github.com/alexander-rakhlin/CNN-for-Sentence-Classification-in-Keras

- github(Tensorflow): https://github.com/abhaikollara/CNN-Sentence-Classification

Recurrent Convolutional Neural Networks for Text Classification

- paper: http://www.aaai.org/ocs/index.php/AAAI/AAAI15/paper/view/9745/9552

- github: https://github.com/knok/rcnn-text-classification

Character-level Convolutional Networks for Text Classification

- intro: NIPS 2015. “Text Understanding from Scratch”

- arxiv: http://arxiv.org/abs/1509.01626

- github: https://github.com/zhangxiangxiao/Crepe

- datasets: http://goo.gl/JyCnZq

- github(TensorFlow): https://github.com/mhjabreel/CharCNN

A C-LSTM Neural Network for Text Classification

Rationale-Augmented Convolutional Neural Networks for Text Classification

Text classification using DIGITS and Torch7

Recurrent Neural Network for Text Classification with Multi-Task Learning

Deep Multi-Task Learning with Shared Memory

- intro: EMNLP 2016

- arxiv: https://arxiv.org/abs/1609.07222

Virtual Adversarial Training for Semi-Supervised Text Classification

Adversarial Training Methods for Semi-Supervised Text Classification

- arxiv: http://arxiv.org/abs/1605.07725

- notes: https://github.com/dennybritz/deeplearning-papernotes/blob/master/notes/adversarial-text-classification.md

Sentence Convolution Code in Torch: Text classification using a convolutional neural network

Bag of Tricks for Efficient Text Classification

- intro: Facebook AI Research

- arxiv: http://arxiv.org/abs/1607.01759

- github: https://github.com/kemaswill/fasttext_torch

- github: https://github.com/facebookresearch/fastText

Actionable and Political Text Classification using Word Embeddings and LSTM

Implementing a CNN for Text Classification in TensorFlow

fancy-cnn: Multiparadigm Sequential Convolutional Neural Networks for text classification

Convolutional Neural Networks for Text Categorization: Shallow Word-level vs. Deep Character-level

Tweet Classification using RNN and CNN

Hierarchical Attention Networks for Document Classification

- intro: CMU & MSR. NAACL 2016

- paper: https://www.cs.cmu.edu/~diyiy/docs/naacl16.pdf

- github(TensorFlow): https://github.com/raviqqe/tensorflow-font2char2word2sent2doc

- github(TensorFlow): https://github.com/ematvey/deep-text-classifier

AC-BLSTM: Asymmetric Convolutional Bidirectional LSTM Networks for Text Classification

- arxiv: https://arxiv.org/abs/1611.01884

- github(MXNet): https://github.com/Ldpe2G/AC-BLSTM

Generative and Discriminative Text Classification with Recurrent Neural Networks

- intro: DeepMind

- arxiv: https://arxiv.org/abs/1703.01898

Adversarial Multi-task Learning for Text Classification

- intro: ACL 2017

- arxiv: https://arxiv.org/abs/1704.05742

- data: http://nlp.fudan.edu.cn/data/

Deep Text Classification Can be Fooled

- intro: Renmin University of China

- arxiv: https://arxiv.org/abs/1704.08006

Deep neural network framework for multi-label text classification

Multi-Task Label Embedding for Text Classification

- intro: Shanghai Jiao Tong University

- arxiv: https://arxiv.org/abs/1710.07210

Text Clustering

Self-Taught Convolutional Neural Networks for Short Text Clustering

- intro: Chinese Academy of Sciences. accepted for publication in Neural Networks

- arxiv: https://arxiv.org/abs/1701.00185

- github: https://github.com/jacoxu/STC2

Alignment

Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading Books

Dialog

Visual Dialog

- website: http://visualdialog.org/

- arxiv: https://arxiv.org/abs/1611.08669

- github: https://github.com/batra-mlp-lab/visdial-amt-chat

- github(Torch): https://github.com/batra-mlp-lab/visdial

- github(PyTorch): https://github.com/Cloud-CV/visual-chatbot

- demo: http://visualchatbot.cloudcv.org/

Papers, code and data from FAIR for various memory-augmented nets with application to text understanding and dialogue.

Neural Emoji Recommendation in Dialogue Systems

- intro: Tsinghua University & Baidu

- arxiv: https://arxiv.org/abs/1612.04609

Memory Networks

Neural Turing Machines

- paper: http://arxiv.org/abs/1410.5401

- Chinese translation: http://www.jianshu.com/p/94dabe29a43b

- github: https://github.com/shawntan/neural-turing-machines

- github: https://github.com/DoctorTeeth/diffmem

- github: https://github.com/carpedm20/NTM-tensorflow

- blog: https://blog.aidangomez.ca/2016/05/16/The-Neural-Turing-Machine/

Memory Networks

- intro: Facebook AI Research

- arxiv: http://arxiv.org/abs/1410.3916

- github: https://github.com/npow/MemNN

End-To-End Memory Networks

- intro: Facebook AI Research

- intro: Continuous version of memory extraction via softmax. “Weakly supervised memory networks”

- arxiv: http://arxiv.org/abs/1503.08895

- github: https://github.com/facebook/MemNN

- github: https://github.com/vinhkhuc/MemN2N-babi-python

- github: https://github.com/npow/MemN2N

- github: https://github.com/domluna/memn2n

- github(Tensorflow): https://github.com/abhaikollara/MemN2N-Tensorflow

- video: http://research.microsoft.com/apps/video/default.aspx?id=259920&r=1

- video: http://pan.baidu.com/s/1pKiGLzP
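
The "memory extraction via softmax" idea in the End-To-End Memory Networks entry can be sketched as a single read step: score each memory slot against the query, normalize the scores with a softmax, and return the weighted sum of the output memories. This is a minimal pure-Python illustration under that reading; the function names are hypothetical and the learned embedding matrices are left out.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def memory_read(query, input_memories, output_memories):
    """One soft read: attention weights over slots, then a weighted sum.

    input_memories are matched against the query; output_memories hold
    the vectors that are actually returned (both already embedded).
    """
    weights = softmax([dot(query, m) for m in input_memories])
    dim = len(output_memories[0])
    read = [
        sum(w * c[j] for w, c in zip(weights, output_memories))
        for j in range(dim)
    ]
    return weights, read
```

Because every slot gets a nonzero weight, the read is differentiable end to end, which is what lets the model train without supervision on which memory to pick.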

Reinforcement Learning Neural Turing Machines - Revised

Learning to Transduce with Unbounded Memory

- intro: Google DeepMind

- arxiv: http://arxiv.org/abs/1506.02516

How to Code and Understand DeepMind’s Neural Stack Machine

- blog: https://iamtrask.github.io/2016/02/25/deepminds-neural-stack-machine/

- video tutorial: http://pan.baidu.com/s/1qX0EGDe

Ask Me Anything: Dynamic Memory Networks for Natural Language Processing

- intro: Memory networks implemented via RNNs and gated recurrent units (GRUs).

- arxiv: http://arxiv.org/abs/1506.07285

- blog(“Implementing Dynamic memory networks”): http://yerevann.github.io/2016/02/05/implementing-dynamic-memory-networks/

- github(Python): https://github.com/swstarlab/DynamicMemoryNetworks
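
For readers unfamiliar with the gated recurrent units mentioned in the entry above, a single GRU update can be sketched as follows. This is a generic illustration, not the Dynamic Memory Network code: biases are omitted, the `params` dictionary keys are hypothetical names, and the blend `(1 - z) * h_prev + z * candidate` is one of two conventions in use (some papers swap the roles of `z` and `1 - z`).

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def matvec(matrix, vector):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def gru_step(x, h_prev, params):
    """One GRU update without biases.

    params holds hypothetical weight matrices: Wz/Uz (update gate),
    Wr/Ur (reset gate), Wh/Uh (candidate state).
    """
    z = [sigmoid(a + b)
         for a, b in zip(matvec(params["Wz"], x), matvec(params["Uz"], h_prev))]
    r = [sigmoid(a + b)
         for a, b in zip(matvec(params["Wr"], x), matvec(params["Ur"], h_prev))]
    # The reset gate scales how much of the old state feeds the candidate.
    gated = [ri * hi for ri, hi in zip(r, h_prev)]
    cand = [math.tanh(a + b)
            for a, b in zip(matvec(params["Wh"], x), matvec(params["Uh"], gated))]
    # The update gate interpolates between the old state and the candidate.
    return [(1.0 - zi) * hi + zi * ci for zi, hi, ci in zip(z, h_prev, cand)]
```

With all weights at zero both gates sit at 0.5 and the candidate is zero, so the step simply halves the previous state, which makes a convenient sanity check.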

Ask Me Even More: Dynamic Memory Tensor Networks (Extended Model)

- intro: extensions for the Dynamic Memory Network (DMN)

- arxiv: https://arxiv.org/abs/1703.03939

- github: https://github.com/rgsachin/DMTN

Structured Memory for Neural Turing Machines

- intro: IBM Watson

- arxiv: http://arxiv.org/abs/1510.03931

Dynamic Memory Networks for Visual and Textual Question Answering

- intro: MetaMind 2016

- arxiv: http://arxiv.org/abs/1603.01417

- slides: http://slides.com/smerity/dmn-for-tqa-and-vqa-nvidia-gtc#/

- github: https://github.com/therne/dmn-tensorflow

- github(Theano): https://github.com/ethancaballero/Improved-Dynamic-Memory-Networks-DMN-plus

- review: https://www.technologyreview.com/s/600958/the-memory-trick-making-computers-seem-smarter/

- github(Tensorflow): https://github.com/DeepRNN/visual_question_answering

Neural GPUs Learn Algorithms

- arxiv: http://arxiv.org/abs/1511.08228

- github: https://github.com/tensorflow/models/tree/master/neural_gpu

- github: https://github.com/ikostrikov/torch-neural-gpu

- github: https://github.com/tristandeleu/neural-gpu

Hierarchical Memory Networks

Convolutional Residual Memory Networks

NTM-Lasagne: A Library for Neural Turing Machines in Lasagne

- github: https://github.com/snipsco/ntm-lasagne

- blog: https://medium.com/snips-ai/ntm-lasagne-a-library-for-neural-turing-machines-in-lasagne-2cdce6837315#.96pnh1m6j

Evolving Neural Turing Machines for Reward-based Learning

- homepage: http://sebastianrisi.com/entm/

- paper: http://sebastianrisi.com/wp-content/uploads/greve_gecco16.pdf

- code: https://www.dropbox.com/s/t019mwabw5nsnxf/neuralturingmachines-master.zip?dl=0

Hierarchical Memory Networks for Answer Selection on Unknown Words

- intro: COLING 2016

- arxiv: https://arxiv.org/abs/1609.08843

- github: https://github.com/jacoxu/HMN4QA

Gated End-to-End Memory Networks

Can Active Memory Replace Attention?

- intro: Google Brain

- arxiv: https://arxiv.org/abs/1610.08613

A Taxonomy for Neural Memory Networks

- intro: University of Florida

- arxiv: https://arxiv.org/abs/1805.00327

Papers

Globally Normalized Transition-Based Neural Networks

- intro: part-of-speech tagging, dependency parsing and sentence compression

- arxiv: http://arxiv.org/abs/1603.06042

- github(SyntaxNet): https://github.com/tensorflow/models/tree/master/syntaxnet

A Decomposable Attention Model for Natural Language Inference

- intro: EMNLP 2016

- arxiv: http://arxiv.org/abs/1606.01933

- github(Keras+spaCy): https://github.com/explosion/spaCy/tree/master/examples/keras_parikh_entailment

Improving Recurrent Neural Networks For Sequence Labelling

Recurrent Memory Networks for Language Modeling

- arxiv: http://arxiv.org/abs/1601.01272

- github: https://github.com/ketranm/RMN

Tweet2Vec: Learning Tweet Embeddings Using Character-level CNN-LSTM Encoder-Decoder

- intro: MIT Media Lab

- arxiv: http://arxiv.org/abs/1607.07514

Learning text representation using recurrent convolutional neural network with highway layers

- intro: Neu-IR ‘16 SIGIR Workshop on Neural Information Retrieval

- arxiv: http://arxiv.org/abs/1606.06905

- github: https://github.com/wenying45/deep_learning_tutorial/tree/master/rcnn-hw

Ask the GRU: Multi-task Learning for Deep Text Recommendations

From phonemes to images: levels of representation in a recurrent neural model of visually-grounded language learning

- intro: COLING 2016

- arxiv: https://arxiv.org/abs/1610.03342

Visualizing Linguistic Shift

A Joint Many-Task Model: Growing a Neural Network for Multiple NLP Tasks

- intro: The University of Tokyo & Salesforce Research

- arxiv: https://arxiv.org/abs/1611.01587

Deep Learning applied to NLP

https://arxiv.org/abs/1703.03091

Attention Is All You Need

- intro: Google Brain & Google Research & University of Toronto

- intro: Just attention + positional encoding = state of the art

- arxiv: https://arxiv.org/abs/1706.03762

- github(Chainer): https://github.com/soskek/attention_is_all_you_need
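
The "positional encoding" half of the summary above refers to the fixed sinusoidal table that the Transformer adds to its token embeddings so that attention can see word order. A minimal pure-Python sketch of that table follows; the function name is illustrative, and real implementations typically build it as a tensor in their framework of choice.

```python
import math


def positional_encoding(num_positions, d_model):
    """Sinusoidal position table of shape [num_positions][d_model].

    Even columns use sin, odd columns use cos, and each sin/cos pair
    shares a wavelength that grows geometrically with the column index.
    """
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(d_model):
            pair_index = i // 2
            angle = pos / (10000 ** (2 * pair_index / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table
```

Position 0 always encodes as alternating 0 and 1, and later positions rotate each sin/cos pair at its own frequency, so nearby positions get similar vectors.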

Recent Trends in Deep Learning Based Natural Language Processing

- intro: Beijing Institute of Technology & National University of Singapore & Nanyang Technological University

- arxiv: https://arxiv.org/abs/1708.02709

HotFlip: White-Box Adversarial Examples for NLP

- intro: University of Oregon & Nanjing University

- arxiv: https://arxiv.org/abs/1712.06751

No Metrics Are Perfect: Adversarial Reward Learning for Visual Storytelling

- intro: ACL 2018

- arxiv: https://arxiv.org/abs/1804.09160

Interesting Applications

Data-driven HR - Résumé Analysis Based on Natural Language Processing and Machine Learning

sk_p: a neural program corrector for MOOCs

- intro: MIT

- intro: Using seq2seq to fix buggy code submissions in MOOCs

- arxiv: http://arxiv.org/abs/1607.02902

Neural Generation of Regular Expressions from Natural Language with Minimal Domain Knowledge

- intro: EMNLP 2016

- intro: translating natural language queries into regular expressions which embody their meaning

- arxiv: http://arxiv.org/abs/1608.03000

emoji2vec: Learning Emoji Representations from their Description

- intro: EMNLP 2016

- arxiv: http://arxiv.org/abs/1609.08359

Inside-Outside and Forward-Backward Algorithms Are Just Backprop (Tutorial Paper)

Cruciform: Solving Crosswords with Natural Language Processing

Smart Reply: Automated Response Suggestion for Email

- intro: Google. KDD 2016

- arxiv: https://arxiv.org/abs/1606.04870

- notes: https://blog.acolyer.org/2016/11/24/smart-reply-automated-response-suggestion-for-email/

Deep Learning for RegEx

- intro: a winning submission to the "Extraction of product attribute values" competition (CrowdAnalytix)

- blog: http://dlacombejr.github.io/2016/11/13/deep-learning-for-regex.html

Learning Python Code Suggestion with a Sparse Pointer Network

- intro: Learning to Auto-Complete using RNN Language Models

- intro: University College London

- arxiv: https://arxiv.org/abs/1611.08307

- github: https://github.com/uclmr/pycodesuggest

End-to-End Prediction of Buffer Overruns from Raw Source Code via Neural Memory Networks

https://arxiv.org/abs/1703.02458

Convolutional Sequence to Sequence Learning

- arxiv: https://arxiv.org/abs/1705.03122

- paper: https://s3.amazonaws.com/fairseq/papers/convolutional-sequence-to-sequence-learning.pdf

- github: https://github.com/facebookresearch/fairseq

DeepFix: Fixing Common C Language Errors by Deep Learning

- intro: AAAI 2017. Indian Institute of Science

- project page: http://www.iisc-seal.net/deepfix

- paper: https://www.aaai.org/ocs/index.php/AAAI/AAAI17/paper/view/14603/13921

- bitbucket: https://bitbucket.org/iiscseal/deepfix

Hierarchically-Attentive RNN for Album Summarization and Storytelling

- intro: EMNLP 2017. UNC Chapel Hill

- arxiv: https://arxiv.org/abs/1708.02977

Project

TheanoLM - An Extensible Toolkit for Neural Network Language Modeling

NLP-Caffe: natural language processing with Caffe

DL4NLP: Deep Learning for Natural Language Processing

- github: https://github.com/nokuno/dl4nlp

Combining CNN and RNN for spoken language identification

- blog: http://yerevann.github.io/2016/06/26/combining-cnn-and-rnn-for-spoken-language-identification/

- github: https://github.com/YerevaNN/Spoken-language-identification/tree/master/theano

Character-Aware Neural Language Models: LSTM language model with CNN over characters in TensorFlow

Neural Relation Extraction with Selective Attention over Instances

- paper: http://nlp.csai.tsinghua.edu.cn/~lzy/publications/acl2016_nre.pdf

- github: https://github.com/thunlp/NRE

deep-simplification: Text simplification using RNNs

- intro: achieves a BLEU score of 61.14

- github: https://github.com/mbartoli/deep-simplification

lamtram: A toolkit for language and translation modeling using neural networks

Lango: Language Lego

- intro: Lango is a natural language processing library for working with the building blocks of language.

- github: https://github.com/ayoungprogrammer/Lango

Sequence-to-Sequence Learning with Attentional Neural Networks

- github(Torch): https://github.com/harvardnlp/seq2seq-attn

harvardnlp code

- intro: open-source implementations of popular deep learning techniques with applications to NLP

- homepage: http://nlp.seas.harvard.edu/code/

Seq2seq: Sequence to Sequence Learning with Keras

debug seq2seq

Recurrent & convolutional neural network modules

- intro: This repo contains Theano implementations of popular neural network components and optimization methods.

- github: https://github.com/taolei87/rcnn

Datasets

Datasets for Natural Language Processing

Blogs

How to read: Character level deep learning

Heavy Metal and Natural Language Processing

- part 1: http://www.degeneratestate.org/posts/2016/Apr/20/heavy-metal-and-natural-language-processing-part-1/

Sequence To Sequence Attention Models In PyCNN

https://talbaumel.github.io/Neural+Attention+Mechanism.html

Source Code Classification Using Deep Learning

http://blog.aylien.com/source-code-classification-using-deep-learning/

My Process for Learning Natural Language Processing with Deep Learning

Convolutional Methods for Text

https://medium.com/@TalPerry/convolutional-methods-for-text-d5260fd5675f

Word2Vec

Word2Vec Tutorial - The Skip-Gram Model

http://mccormickml.com/2016/04/19/word2vec-tutorial-the-skip-gram-model/

Word2Vec Tutorial Part 2 - Negative Sampling

http://mccormickml.com/2017/01/11/word2vec-tutorial-part-2-negative-sampling/

Word2Vec Resources

http://mccormickml.com/2016/04/27/word2vec-resources/

Demos

AskImage.org - Deep Learning for Answering Questions about Images

- homepage: http://www.askimage.org/

Talks / Videos

Navigating Natural Language Using Reinforcement Learning

Resources

So, you need to understand language data? Open-source NLP software can help!

- blog: http://entopix.com/so-you-need-to-understand-language-data-open-source-nlp-software-can-help.html

Curated list of resources on building bots

Notes for deep learning on NLP

https://medium.com/@frank_chung/notes-for-deep-learning-on-nlp-94ddfcb45723#.iouo0v7m7

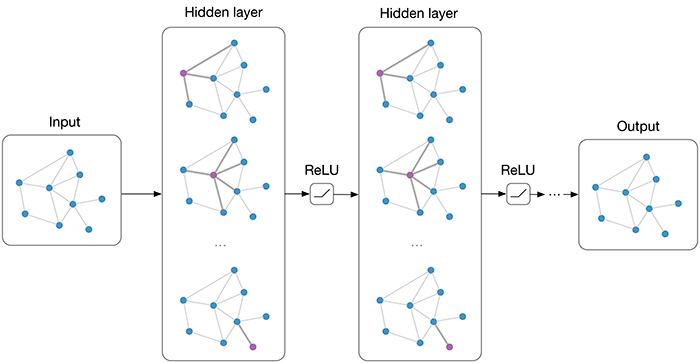

Graph Convolutional Networks

Learning Convolutional Neural Networks for Graphs

- intro: ICML 2016

- arxiv: http://arxiv.org/abs/1605.05273

Convolutional Neural Networks on Graphs with Fast Localized Spectral Filtering

- arxiv: https://arxiv.org/abs/1606.09375

- github: https://github.com/mdeff/cnn_graph

- github: https://github.com/pfnet-research/chainer-graph-cnn

Semi-Supervised Classification with Graph Convolutional Networks

- arxiv: http://arxiv.org/abs/1609.02907

- github: https://github.com/tkipf/gcn

- blog: http://tkipf.github.io/graph-convolutional-networks/

Graph Based Convolutional Neural Network

- intro: BMVC 2016

- arxiv: http://arxiv.org/abs/1609.08965

How powerful are Graph Convolutions? (review of Kipf & Welling, 2016)

http://www.inference.vc/how-powerful-are-graph-convolutions-review-of-kipf-welling-2016-2/

Graph Convolutional Networks

DeepGraph: Graph Structure Predicts Network Growth

Deep Learning with Sets and Point Clouds

- intro: CMU

- arxiv: https://arxiv.org/abs/1611.04500

Deep Learning on Graphs

Robust Spatial Filtering with Graph Convolutional Neural Networks

https://arxiv.org/abs/1703.00792

Modeling Relational Data with Graph Convolutional Networks

https://arxiv.org/abs/1703.06103

Distance Metric Learning using Graph Convolutional Networks: Application to Functional Brain Networks

- intro: Imperial College London

- arxiv: https://arxiv.org/abs/1703.02161

Deep Learning on Graphs with Graph Convolutional Networks

Deep Learning on Graphs with Keras

- intro: Keras implementation of Graph Convolutional Networks

- github: https://github.com/tkipf/keras-gcn

Learning Graph While Training: An Evolving Graph Convolutional Neural Network

https://arxiv.org/abs/1708.04675

Graph Attention Networks

- intro: ICLR 2018

- intro: University of Cambridge & Centre de Visió per Computador, UAB & Montreal Institute for Learning Algorithms

- project page: http://petar-v.com/GAT/

- arxiv: https://arxiv.org/abs/1710.10903

- github: https://github.com/PetarV-/GAT

Residual Gated Graph ConvNets

https://arxiv.org/abs/1711.07553

Probabilistic and Regularized Graph Convolutional Networks

- intro: CMU

- arxiv: https://arxiv.org/abs/1803.04489

Videos as Space-Time Region Graphs

https://arxiv.org/abs/1806.01810

Relational inductive biases, deep learning, and graph networks

- intro: DeepMind & Google Brain & MIT & University of Edinburgh

- arxiv: https://arxiv.org/abs/1806.01261

Can GCNs Go as Deep as CNNs?

- project: https://sites.google.com/view/deep-gcns

- arxiv: https://arxiv.org/abs/1904.03751

- slides: https://docs.google.com/presentation/d/1L82wWymMnHyYJk3xUKvteEWD5fX0jVRbCbI65Cxxku0/edit#slide=id.p

- github(official, TensorFlow): https://github.com/lightaime/deep_gcns

GMNN: Graph Markov Neural Networks

- intro: ICML 2019

- arxiv: https://arxiv.org/abs/1905.06214

- github: https://github.com/DeepGraphLearning/GMNN

DeepGCNs: Making GCNs Go as Deep as CNNs

- intro: ICCV 2019 Oral

- arxiv: https://arxiv.org/abs/1910.06849

- github: https://github.com/lightaime/deep_gcns_torch

- github: https://github.com/lightaime/deep_gcns

Rethinking pooling in graph neural networks

- intro: NeurIPS 2020

- arxiv: https://arxiv.org/abs/2010.11418

Generative Adversarial Networks

Generative Adversarial Nets

- arxiv: http://arxiv.org/abs/1406.2661

- paper: https://papers.nips.cc/paper/5423-generative-adversarial-nets.pdf

- github: https://github.com/goodfeli/adversarial

- github: https://github.com/aleju/cat-generator

Adversarial Feature Learning

- intro: ICLR 2017

- arxiv: https://arxiv.org/abs/1605.09782

- github: https://github.com/jeffdonahue/bigan

Generative Adversarial Networks

- intro: by Ian Goodfellow, NIPS 2016 tutorial

- arxiv: https://arxiv.org/abs/1701.00160

- slides: http://www.iangoodfellow.com/slides/2016-12-04-NIPS.pdf

- mirror: https://pan.baidu.com/s/1gfBNYW7

Adversarial Examples and Adversarial Training

- intro: NIPS 2016, by Ian Goodfellow (OpenAI)

- slides: http://www.iangoodfellow.com/slides/2016-12-9-AT.pdf

How to Train a GAN? Tips and tricks to make GANs work

Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks

- intro: CatGAN

- arxiv: http://arxiv.org/abs/1511.06390

Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks

- intro: DCGAN

- arxiv: http://arxiv.org/abs/1511.06434

- github: https://github.com/jazzsaxmafia/dcgan_tensorflow

- github: https://github.com/Newmu/dcgan_code

- github: https://github.com/mattya/chainer-DCGAN

- github: https://github.com/soumith/dcgan.torch

- github: https://github.com/carpedm20/DCGAN-tensorflow

InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets

- arxiv: https://arxiv.org/abs/1606.03657

- github: https://github.com/openai/InfoGAN

- github(Tensorflow): https://github.com/buriburisuri/supervised_infogan

Learning Interpretable Latent Representations with InfoGAN: A tutorial on implementing InfoGAN in Tensorflow

- blog: https://medium.com/@awjuliani/learning-interpretable-latent-representations-with-infogan-dd710852db46#.r0kur3aum

- github: https://gist.github.com/awjuliani/c9ecd8b37d33d6855cd4ed9aa16ce89f#file-infogan-tutorial-ipynb

Coupled Generative Adversarial Networks

Energy-based Generative Adversarial Network

- intro: EBGAN

- author: Junbo Zhao, Michael Mathieu, Yann LeCun

- arxiv: http://arxiv.org/abs/1609.03126

- github(Tensorflow): https://github.com/buriburisuri/ebgan

SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient

Connecting Generative Adversarial Networks and Actor-Critic Methods

Generative Adversarial Nets from a Density Ratio Estimation Perspective

Unrolled Generative Adversarial Networks

Generative Adversarial Networks as Variational Training of Energy Based Models

Multi-class Generative Adversarial Networks with the L2 Loss Function

Least Squares Generative Adversarial Networks

Inverting The Generator Of A Generative Adversarial Network

- intro: NIPS 2016 Workshop on Adversarial Training

- arxiv: https://arxiv.org/abs/1611.05644

ml4a-invisible-cities

- project page: https://opendot.github.io/ml4a-invisible-cities/

- github: https://github.com/opendot/ml4a-invisible-cities

Semi-Supervised Learning with Context-Conditional Generative Adversarial Networks

Associative Adversarial Networks

- intro: NIPS 2016 Workshop on Adversarial Training

- arxiv: https://arxiv.org/abs/1611.06953

Temporal Generative Adversarial Nets

Handwriting Profiling using Generative Adversarial Networks

- intro: Accepted at The Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17 Student Abstract and Poster Program)

- arxiv: https://arxiv.org/abs/1611.08789

C-RNN-GAN: Continuous recurrent neural networks with adversarial training

- intro: Constructive Machine Learning Workshop (CML) at NIPS 2016

- project page: http://mogren.one/publications/2016/c-rnn-gan/

- arxiv: https://arxiv.org/abs/1611.09904

- github: https://github.com/olofmogren/c-rnn-gan

Ensembles of Generative Adversarial Networks

- intro: NIPS 2016 Workshop on Adversarial Training

- arxiv: https://arxiv.org/abs/1612.00991

Improved generator objectives for GANs

- intro: NIPS 2016 Workshop on Adversarial Training

- arxiv: https://arxiv.org/abs/1612.02780

Stacked Generative Adversarial Networks

- intro: SGAN

- arxiv: https://arxiv.org/abs/1612.04357

- github: https://github.com/xunhuang1995/SGAN

Unsupervised Pixel-Level Domain Adaptation with Generative Adversarial Networks

- intro: Google Brain & Google Research

- arxiv: https://arxiv.org/abs/1612.05424

AdaGAN: Boosting Generative Models

- intro: Max Planck Institute for Intelligent Systems & Google Brain

- arxiv: https://arxiv.org/abs/1701.02386

Towards Principled Methods for Training Generative Adversarial Networks

- intro: Courant Institute of Mathematical Sciences & Facebook AI Research

- arxiv: https://arxiv.org/abs/1701.04862

Wasserstein GAN

- intro: Courant Institute of Mathematical Sciences & Facebook AI Research

- arxiv: https://arxiv.org/abs/1701.07875

- github: https://github.com/martinarjovsky/WassersteinGAN

- github: https://github.com/Zardinality/WGAN-tensorflow

- github(Tensorflow/Keras): https://github.com/kuleshov/tf-wgan

- github: https://github.com/shekkizh/WassersteinGAN.tensorflow

- gist: https://gist.github.com/soumith/71995cecc5b99cda38106ad64503cee3

- reddit: https://www.reddit.com/r/MachineLearning/comments/5qxoaz/r_170107875_wasserstein_gan/

Improved Training of Wasserstein GANs

- intro: NIPS 2017

- arxiv: https://arxiv.org/abs/1704.00028

- github(TensorFlow): https://github.com/igul222/improved_wgan_training

- github: https://github.com/jalola/improved-wgan-pytorch

On the effect of Batch Normalization and Weight Normalization in Generative Adversarial Networks

On the Effects of Batch and Weight Normalization in Generative Adversarial Networks

Controllable Generative Adversarial Network

- intro: Korea University

- arxiv: https://arxiv.org/abs/1708.00598

Generative Adversarial Networks: An Overview

- intro: Imperial College London & Victoria University of Wellington & University of Montreal & Cortexica Vision Systems Ltd

- intro: IEEE Signal Processing Magazine Special Issue on Deep Learning for Visual Understanding

- arxiv: https://arxiv.org/abs/1710.07035

CyCADA: Cycle-Consistent Adversarial Domain Adaptation

https://arxiv.org/abs/1711.03213

Spectral Normalization for Generative Adversarial Networks

https://openreview.net/forum?id=B1QRgziT-

Are GANs Created Equal? A Large-Scale Study

- intro: Google Brain

- arxiv: https://arxiv.org/abs/1711.10337

- reddit: https://www.reddit.com/r/MachineLearning/comments/7gwip3/d_googles_large_scale_gantuning_paper_unfairly/

GAGAN: Geometry-Aware Generative Adversarial Networks

https://arxiv.org/abs/1712.00684

CycleGAN: a Master of Steganography

- intro: NIPS 2017, workshop on Machine Deception

- arxiv: https://arxiv.org/abs/1712.02950

PacGAN: The power of two samples in generative adversarial networks

- intro: CMU & University of Illinois at Urbana-Champaign

- arxiv: https://arxiv.org/abs/1712.04086

ComboGAN: Unrestrained Scalability for Image Domain Translation

Decoupled Learning for Conditional Adversarial Networks

https://arxiv.org/abs/1801.06790

No Modes left behind: Capturing the data distribution effectively using GANs

- intro: AAAI 2018

- arxiv: https://arxiv.org/abs/1802.00771

Improving GAN Training via Binarized Representation Entropy (BRE) Regularization

- intro: ICLR 2018

- arxiv: https://arxiv.org/abs/1805.03644

- github: https://github.com/BorealisAI/bre-gan

On GANs and GMMs

https://arxiv.org/abs/1805.12462

The Unusual Effectiveness of Averaging in GAN Training

https://arxiv.org/abs/1806.04498

Understanding the Effectiveness of Lipschitz Constraint in Training of GANs via Gradient Analysis

https://arxiv.org/abs/1807.00751

The GAN Landscape: Losses, Architectures, Regularization, and Normalization

- intro: Google Brain

- arxiv: https://arxiv.org/abs/1807.04720

- github: https://github.com/google/compare_gan

Which Training Methods for GANs do actually Converge?

- intro: ICML 2018. MPI Tübingen & Microsoft Research

- project page: https://avg.is.tuebingen.mpg.de/publications/meschedericml2018

- paper: https://avg.is.tuebingen.mpg.de/uploads_file/attachment/attachment/424/Mescheder2018ICML.pdf

- github: https://github.com/LMescheder/GAN_stability

Convergence Problems with Generative Adversarial Networks (GANs)

- intro: University of Oxford

- arxiv: https://arxiv.org/abs/1806.11382

Bayesian CycleGAN via Marginalizing Latent Sampling

https://arxiv.org/abs/1811.07465

GAN Dissection: Visualizing and Understanding Generative Adversarial Networks

https://arxiv.org/abs/1811.10597

Do GAN Loss Functions Really Matter?

https://arxiv.org/abs/1811.09567

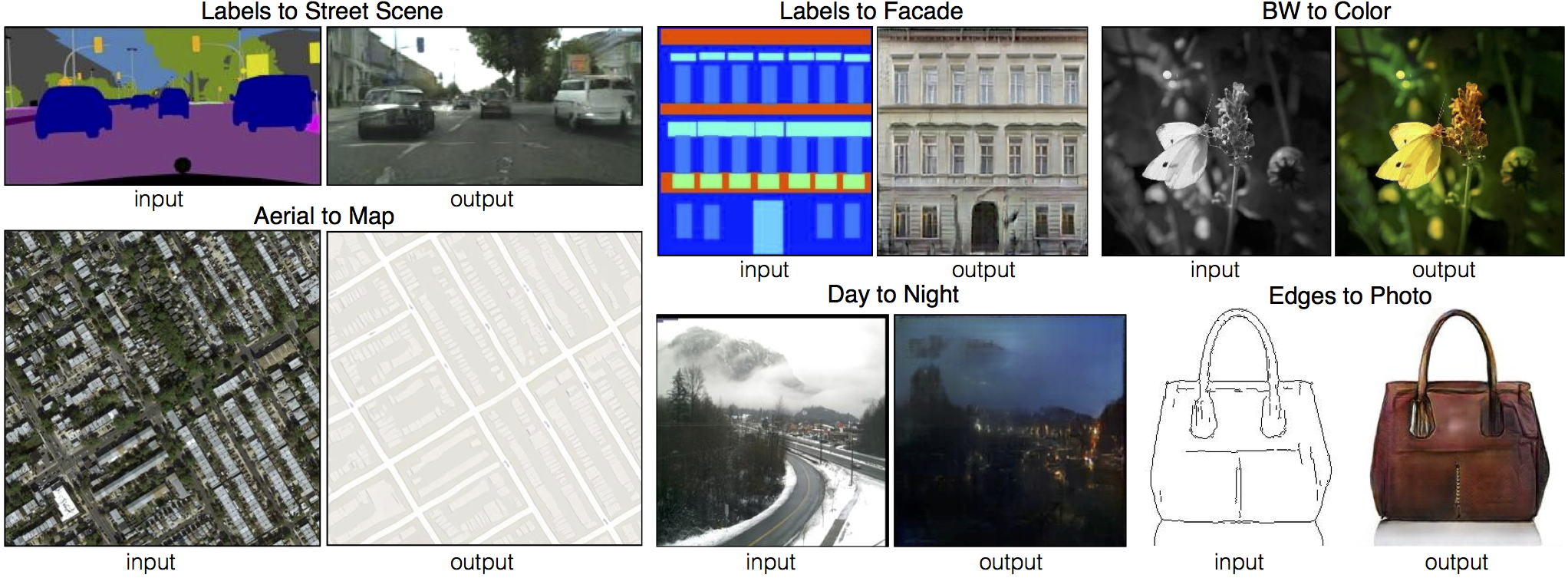

Image-to-Image Translation

Pix2Pix

Image-to-Image Translation with Conditional Adversarial Networks

- intro: CVPR 2017

- project page: https://phillipi.github.io/pix2pix/

- arxiv: https://arxiv.org/abs/1611.07004

- github: https://github.com/phillipi/pix2pix

- github(TensorFlow): https://github.com/yenchenlin/pix2pix-tensorflow

- github(Chainer): https://github.com/mattya/chainer-pix2pix

- github(PyTorch): https://github.com/mrzhu-cool/pix2pix-pytorch

- github(Chainer): https://github.com/wuhuikai/chainer-pix2pix

Remastering Classic Films in Tensorflow with Pix2Pix

- blog: https://hackernoon.com/remastering-classic-films-in-tensorflow-with-pix2pix-f4d551fa0503#.6dmahnt8n

- github: https://github.com/awjuliani/Pix2Pix-Film

- model: https://drive.google.com/file/d/0B8x0IeJAaBccNFVQMkQ0QW15TjQ/view

Image-to-Image Translation in Tensorflow

- blog: http://affinelayer.com/pix2pix/index.html

- github: https://github.com/affinelayer/pix2pix-tensorflow

webcam pix2pix

https://github.com/memo/webcam-pix2pix-tensorflow

Unsupervised Image-to-Image Translation with Generative Adversarial Networks

- intro: Imperial College London & Indian Institute of Technology

- arxiv: https://arxiv.org/abs/1701.02676

Unsupervised Image-to-Image Translation Networks

- intro: NIPS 2017 Spotlight

- intro: unsupervised/unpaired image-to-image translation using coupled GANs

- project page: http://research.nvidia.com/publication/2017-12_Unsupervised-Image-to-Image-Translation

- arxiv: https://arxiv.org/abs/1703.00848

- github: https://github.com/mingyuliutw/UNIT

Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

- intro: UC Berkeley

- project page: https://junyanz.github.io/CycleGAN/

- arxiv: https://arxiv.org/abs/1703.10593

- github(official, Torch): https://github.com/junyanz/CycleGAN

- github(official, PyTorch): https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

- github(PyTorch): https://github.com/eveningglow/semi-supervised-CycleGAN

- github(Chainer): https://github.com/Aixile/chainer-cyclegan

CycleGAN and pix2pix in PyTorch

- intro: Image-to-image translation in PyTorch (e.g. horse2zebra, edges2cats, and more)

- github: https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix

Perceptual Adversarial Networks for Image-to-Image Transformation

https://arxiv.org/abs/1706.09138

XGAN: Unsupervised Image-to-Image Translation for many-to-many Mappings

- intro: IST Austria & Google Brain & Google Research

- arxiv: https://arxiv.org/abs/1711.05139

In2I: Unsupervised Multi-Image-to-Image Translation Using Generative Adversarial Networks

https://arxiv.org/abs/1711.09334

StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation

- intro: Korea University & Clova AI Research

- arxiv: https://arxiv.org/abs/1711.09020

- github: https://github.com/yunjey/StarGAN

Discriminative Region Proposal Adversarial Networks for High-Quality Image-to-Image Translation

https://arxiv.org/abs/1711.09554

Toward Multimodal Image-to-Image Translation

- intro: NIPS 2017. BicycleGAN

- project page: https://junyanz.github.io/BicycleGAN/

- arxiv: https://arxiv.org/abs/1711.11586

- github(official, PyTorch): https://github.com/junyanz/BicycleGAN

- github: https://github.com/gitlimlab/BicycleGAN-Tensorflow

- github: https://github.com/kvmanohar22/img2imgGAN

- github: https://github.com/eveningglow/BicycleGAN-pytorch

Face Translation between Images and Videos using Identity-aware CycleGAN

https://arxiv.org/abs/1712.00971

Unsupervised Multi-Domain Image Translation with Domain-Specific Encoders/Decoders

https://arxiv.org/abs/1712.02050

High-Resolution Image Synthesis and Semantic Manipulation with Conditional GANs

- intro: NVIDIA Corporation, UC Berkeley

- project page: https://tcwang0509.github.io/pix2pixHD/

- arxiv: https://arxiv.org/abs/1711.11585

- github: https://github.com/NVIDIA/pix2pixHD

- youtube: https://www.youtube.com/watch?v=3AIpPlzM_qs&feature=youtu.be

On the Effectiveness of Least Squares Generative Adversarial Networks

https://arxiv.org/abs/1712.06391

GANs for Limited Labeled Data

- intro: Ian Goodfellow

- slides: http://www.iangoodfellow.com/slides/2017-12-09-label.pdf

Defending Against Adversarial Examples

- intro: Ian Goodfellow

- slides: http://www.iangoodfellow.com/slides/2017-12-08-defending.pdf

Conditional Image-to-Image Translation

- intro: CVPR 2018

- arxiv: https://arxiv.org/abs/1805.00251

XOGAN: One-to-Many Unsupervised Image-to-Image Translation

https://arxiv.org/abs/1805.07277

Unsupervised Attention-guided Image to Image Translation

https://arxiv.org/abs/1806.02311

Exemplar Guided Unsupervised Image-to-Image Translation

https://arxiv.org/abs/1805.11145

Improving Shape Deformation in Unsupervised Image-to-Image Translation

https://arxiv.org/abs/1808.04325

Video-to-Video Synthesis

Segmentation Guided Image-to-Image Translation with Adversarial Networks

https://arxiv.org/abs/1901.01569

Projects

Generative Adversarial Networks with Keras

Generative Adversarial Network Demo for Fresh Machine Learning #2

- youtube: https://www.youtube.com/watch?v=deyOX6Mt_As&feature=em-uploademail

- github: https://github.com/llSourcell/Generative-Adversarial-Network-Demo

- demo: http://cs.stanford.edu/people/karpathy/gan/

TextGAN: A generative adversarial network for text generation, written in TensorFlow.

cleverhans v0.1: an adversarial machine learning library

Deep Convolutional Variational Autoencoder w/ Adversarial Network

- intro: An implementation of the deep convolutional generative adversarial network, combined with a variational autoencoder

- github: https://github.com/staturecrane/dcgan_vae_torch

A versatile GAN (generative adversarial network) implementation, focused on scalability and ease of use.

AdaGAN: Boosting Generative Models

- intro: AdaGAN: greedy iterative procedure to train mixtures of GANs

- intro: Max Planck Institute for Intelligent Systems & Google Brain

- arxiv: https://arxiv.org/abs/1701.02386

- github: https://github.com/tolstikhin/adagan

TensorFlow-GAN (TFGAN)

- intro: TFGAN: A Lightweight Library for Generative Adversarial Networks

- github: https://github.com/tensorflow/tensorflow/tree/master/tensorflow/contrib/gan

- blog: https://research.googleblog.com/2017/12/tfgan-lightweight-library-for.html

Blogs

Generative Adversarial Networks Explained

Generative Adversarial Autoencoders in Theano

- blog: https://swarbrickjones.wordpress.com/2016/01/24/generative-adversarial-autoencoders-in-theano/

- github: https://github.com/mikesj-public/dcgan-autoencoder

An introduction to Generative Adversarial Networks (with code in TensorFlow)

- blog: http://blog.aylien.com/introduction-generative-adversarial-networks-code-tensorflow/

- github: https://github.com/AYLIEN/gan-intro

Difficulties training a Generative Adversarial Network

Are Energy-Based GANs any more energy-based than normal GANs?

http://www.inference.vc/are-energy-based-gans-actually-energy-based/

Generative Adversarial Networks Explained with a Classic Spongebob Squarepants Episode: Plus a Tensorflow tutorial for implementing your own GAN

- blog: https://medium.com/@awjuliani/generative-adversarial-networks-explained-with-a-classic-spongebob-squarepants-episode-54deab2fce39#.rpiunhdjh

- gist: https://gist.github.com/awjuliani/8ebf356d03ffee139659807be7fa2611

Deep Learning Research Review Week 1: Generative Adversarial Nets

Stability of Generative Adversarial Networks

Instance Noise: A trick for stabilising GAN training

Generating Fine Art in 300 Lines of Code

- intro: DCGAN

- blog: https://medium.com/@richardherbert/generating-fine-art-in-300-lines-of-code-4d37218216a6#.63qm8ef9g

Talks / Videos

Generative Adversarial Network visualization

Resources

The GAN Zoo

- intro: A list of all named GANs!

- github: https://github.com/hindupuravinash/the-gan-zoo

AdversarialNetsPapers: The classical papers about adversarial nets

GAN Timeline

- intro: A timeline showing the development of Generative Adversarial Networks (GAN)

- github: https://github.com/dongb5/GAN-Timeline